Google is making its search engine smarter

What you need to know

- Google is employing its machine learning chops to make search smarter.

- The search engine will better understand conversational language beyond just keywords.

- The company says it's one of the biggest leaps forward in search.

Google's Pandu Nayak took to the company's blog this week to announce what he calls "the biggest leap forward [in search] in the past five years, and one of the biggest leaps forward in the history of Search." The update changes how Google Search processes your queries.

Nayak points out that people tend to search in what he calls 'keyword-ese' because the search engine has struggled to understand natural language, particularly qualifiers such as 'from' and 'to.' Indeed, the way Search currently works, the service largely ignores such terms and focuses on the keywords it gleans from your query.
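To make the problem concrete, here's a toy Python sketch, assuming a deliberately naive search engine that drops short words like 'to' and 'from' and treats a query as an unordered set of keywords. This is not how Google Search actually works; the stop-word list, the `keywords` function, and the example queries are all hypothetical and made up for illustration.

```python
# Toy illustration only: a deliberately naive keyword matcher.
# The stop-word list and queries below are hypothetical; this is not
# how Google Search processes queries.
STOP_WORDS = {"a", "an", "the", "to", "from", "for", "in"}


def keywords(query: str) -> set[str]:
    """Lowercase the query, split on whitespace, and drop stop words.

    Returning a set also throws away word order.
    """
    return {word for word in query.lower().split() if word not in STOP_WORDS}


brazil_to_us = "brazil traveler to usa visa"
us_to_brazil = "usa traveler to brazil visa"

print(sorted(keywords(brazil_to_us)))  # ['brazil', 'traveler', 'usa', 'visa']
print(sorted(keywords(us_to_brazil)))  # ['brazil', 'traveler', 'usa', 'visa']

# Both queries reduce to the same keywords, so the direction of travel is lost.
print(keywords(brazil_to_us) == keywords(us_to_brazil))  # True
```

Once 'to' is thrown away and word order is ignored, a query about Brazilians visiting the U.S. and one about Americans visiting Brazil look identical to this toy matcher.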

That's soon going to change, at least for English queries in the U.S., with more languages and locales to follow later. By combining recent advances in neural networks for natural language processing with specialized hardware, Google claims it can now understand the context of each word in a query much better in relation to the entire search phrase. Ultimately, that means it can finally start to grasp the significance of essential qualifiers like 'to' and 'from' in a sentence instead of ignoring them entirely.

All this is possible thanks to BERT (no, not the geologist from The Big Bang Theory), short for Bidirectional Encoder Representations from Transformers. It's Google's technique for training neural networks to understand each word in relation to the entire phrase. Previously, the search giant's algorithms tended to interpret search terms in a largely linear fashion, understanding each word only in relation to the ones next to it.

On the hardware side of things, Google is also using Cloud TPUs (custom chips designed to accelerate machine learning workloads) to serve search results for the first time, in order to handle the extra computational complexity that BERT brings.

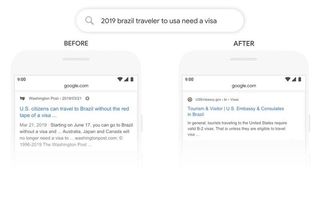

The end result of all that toil? Google provides the example above to show how BERT can meaningfully improve search results and let you communicate with the search engine more naturally. Thanks to its better understanding of the preposition 'to' in the query, Google now recognizes that the user is a Brazilian national looking to travel to the U.S. The older, less sophisticated version of Google Search would have read the query the opposite way, fetching information for U.S. citizens wanting to travel to Brazil.

It's a subtle difference, but it means the world, and Google plans to bring these improvements to the rest of the world. Because of how neural networks work, Nayak says many of the things the company has learned from using BERT on English can be applied to other languages. Google is already testing BERT-powered featured snippets for Korean, Hindi, and Portuguese, and says it's seeing significant improvements.

Get the latest news from Android Central, your trusted companion in the world of Android